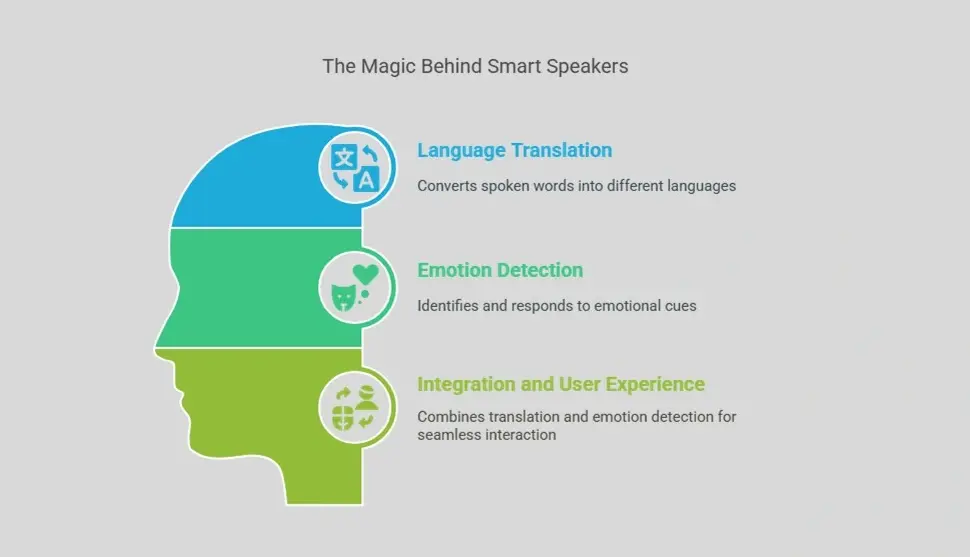

Smart speakers answer questions, play music, and control smart devices with a simple voice command. Behind the scenes, some of these devices are also translating languages and detecting the emotional undertones in our voices. Let’s dive into how smart speakers translate language and detect emotions.

How Smart Speakers Translate Language and Detect Emotions

Language Translation in Smart Speakers

The journey of language translation in a smart speaker begins the moment you speak. The device first uses Automatic Speech Recognition (ASR) to transcribe your spoken words into text. Simultaneously, it identifies the language you’re using.

Once the language is identified, Natural Language Processing (NLP) takes center stage. NLP helps the device understand not only the words but also the meaning and intent behind them. This is achieved through Natural Language Understanding (NLU), which interprets your request, and Natural Language Generation (NLG), which crafts a response in the target language.

The real magic happens with Neural Machine Translation (NMT), a sophisticated system powered by deep learning. NMT analyzes the structure and context of your sentence, then generates a translation that sounds natural. Finally, Text-to-Speech (TTS) technology converts the translated text back into spoken words, allowing you to have real-time conversations across language barriers.
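To make the flow concrete, here is a minimal sketch of the stages chained together: speech recognition with language identification, translation, then speech synthesis. Everything in it is an assumption made for illustration. The names `translate_utterance`, `fake_recognize`, `fake_translate`, `fake_synthesize`, and `PHRASEBOOK` are hypothetical, and the stand-in stages use a tiny lookup table instead of real ASR, NMT, or TTS models.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class TranslationResult:
    source_language: str
    transcript: str
    translation: str
    audio: bytes


def translate_utterance(
    audio_in: bytes,
    target_language: str,
    recognize: Callable[[bytes], Tuple[str, str]],
    translate: Callable[[str, str, str], str],
    synthesize: Callable[[str, str], bytes],
) -> TranslationResult:
    """Run the three stages in order: ASR -> translation -> TTS."""
    # Stage 1: ASR returns the transcript and the detected language.
    transcript, source_language = recognize(audio_in)
    # Stage 2: translate the transcript into the target language.
    translation = translate(transcript, source_language, target_language)
    # Stage 3: turn the translated text back into audio.
    audio_out = synthesize(translation, target_language)
    return TranslationResult(source_language, transcript, translation, audio_out)


# --- Hypothetical stand-ins so the sketch runs without any models ---

PHRASEBOOK = {("en", "fr"): {"good morning": "bonjour", "thank you": "merci"}}


def fake_recognize(audio: bytes) -> Tuple[str, str]:
    # Pretend the "audio" is UTF-8 text and the speaker is using English.
    return audio.decode("utf-8"), "en"


def fake_translate(text: str, source: str, target: str) -> str:
    # Look the phrase up; fall back to the untranslated text if unknown.
    return PHRASEBOOK.get((source, target), {}).get(text.lower(), text)


def fake_synthesize(text: str, language: str) -> bytes:
    # Pretend the encoded text is the synthesized audio.
    return text.encode("utf-8")


result = translate_utterance(
    b"Good morning", "fr", fake_recognize, fake_translate, fake_synthesize
)
print(result.source_language, "->", result.translation)
```

Passing each stage in as a function keeps the pipeline itself independent of any particular speech or translation engine, so a real model could replace a stand-in without touching the other stages.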

While there may be a slight delay due to processing, the experience is remarkably seamless, opening up new possibilities for global communication.

Emotion Detection in Smart Speakers

But smart speakers don’t just understand what you say; they’re also learning to understand how you feel. Emotion detection starts with analyzing the audio features of your voice, such as tone, pitch, volume, and speaking rate. Advanced systems extract detailed patterns from your speech, using techniques like Mel-frequency cepstral coefficient (MFCC) analysis to capture subtle emotional cues.
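As a rough illustration of what "audio features" means in practice, the sketch below computes two of the simpler ones, volume and pitch, from raw samples using only the Python standard library. It is a simplification under stated assumptions: MFCC extraction normally relies on a signal-processing library and is not shown here, the pitch estimate uses a basic zero-crossing count that only behaves well on clean, single-tone signals, and the function names (`sine_wave`, `rms_volume`, `zero_crossing_pitch`, `extract_features`) are hypothetical.

```python
import math


def sine_wave(freq_hz, duration_s, sample_rate=16000, amplitude=0.5):
    """Generate a synthetic test tone as a list of float samples."""
    n = int(duration_s * sample_rate)
    return [
        amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate)
        for i in range(n)
    ]


def rms_volume(samples):
    """Root-mean-square amplitude, a simple proxy for loudness."""
    if not samples:
        raise ValueError("samples must not be empty")
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def zero_crossing_pitch(samples, sample_rate=16000):
    """Rough pitch estimate: a pure tone crosses zero twice per cycle."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    crossings = sum(
        1 for a, b in zip(samples, samples[1:]) if (a < 0) != (b < 0)
    )
    duration_s = len(samples) / sample_rate
    return crossings / (2 * duration_s)


def extract_features(samples, sample_rate=16000):
    """Bundle the features into a dict that a classifier could consume."""
    return {
        "volume": rms_volume(samples),
        "pitch_hz": zero_crossing_pitch(samples, sample_rate),
    }


# A 200 Hz tone stands in for a voiced sound.
tone = sine_wave(200.0, 1.0)
features = extract_features(tone)
print(features)
```

On real speech, features like these are usually computed over short overlapping frames rather than the whole recording, so that changes in pitch and loudness over time are preserved.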

These features are then fed into machine learning and deep learning models trained on vast datasets of emotional speech. The models learn to associate certain vocal patterns with emotions like happiness, sadness, anger, or excitement. Some smart speakers, such as the innovative MoodBox, use this information to tailor their responses, like playing upbeat music if you sound down, or responding with extra empathy if you seem frustrated. Tech giants like Google are also investing heavily in making their voice assistants more emotionally intelligent. Smart speakers may combine voice analysis with other data sources to achieve even more accurate emotion detection.
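The idea of learning to associate vocal patterns with emotions can be sketched with a deliberately simple model. The example below uses a nearest-centroid classifier, not the deep learning models that production systems use, and it is not how any particular product works. The training numbers are made up purely for illustration (pitch, volume, and speaking rate, each scaled to the range 0 to 1), and the names `train_centroids`, `predict_emotion`, and `RESPONSES` are hypothetical.

```python
import math
from collections import defaultdict


def train_centroids(examples):
    """Average the feature vectors of each emotion label."""
    sums = {}
    counts = defaultdict(int)
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {
        label: [s / counts[label] for s in total]
        for label, total in sums.items()
    }


def predict_emotion(centroids, features):
    """Return the label whose centroid is closest to the features."""
    def distance(label):
        return math.sqrt(
            sum((c - f) ** 2 for c, f in zip(centroids[label], features))
        )
    return min(centroids, key=distance)


# Made-up training data: (pitch, volume, speaking rate), each scaled to 0..1.
TOY_EXAMPLES = [
    ((0.8, 0.7, 0.7), "happiness"),
    ((0.9, 0.6, 0.8), "happiness"),
    ((0.2, 0.2, 0.3), "sadness"),
    ((0.3, 0.3, 0.2), "sadness"),
    ((0.6, 0.9, 0.8), "anger"),
    ((0.7, 1.0, 0.9), "anger"),
]

# Hypothetical mapping from a detected emotion to a response strategy.
RESPONSES = {
    "happiness": "match the upbeat tone",
    "sadness": "offer to play upbeat music",
    "anger": "respond calmly with extra empathy",
}

centroids = train_centroids(TOY_EXAMPLES)
emotion = predict_emotion(centroids, (0.25, 0.2, 0.3))
print(emotion, "->", RESPONSES[emotion])
```

A real system would train on far more data with many more features, but the shape of the problem is the same: features go in, an emotion label comes out, and the label steers the response.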

Integration and User Experience

The real power of smart speakers emerges when language translation and emotion detection work hand in hand. Imagine asking your smart speaker to translate a message into French while it senses your excitement and responds with enthusiasm. Or picture a device that recognizes your frustration when a command isn’t understood and offers a calming, helpful response. This integration creates a user experience that is not only efficient but also deeply empathetic.

Despite these advances, translating idioms, handling complex emotions, and reducing processing delays remain the main challenges. Still, the progress so far is remarkable, and the future promises even more seamless and emotionally aware interactions.

Conclusion

Smart speakers are blending cutting-edge technologies in speech recognition, NLP, machine translation, and emotion detection to make our daily interactions smoother and more meaningful. As these devices continue to evolve, they’re not just becoming smarter; they’re becoming better listeners and more understanding companions, bridging language gaps and responding to our emotions in ways that were once the stuff of science fiction.